ai slave

It took me nearly two years, but I’m finally taking AI animation seriously. I held off all this time, not because I wasn’t curious, but because the tools weren’t ready and neither was I.

The tech was limiting, workflows were chaotic, and burning credits on 5-second clips that led nowhere felt wasteful.

The second challenge? The process. Generating hundreds (sometimes thousands) of images just to get a handful that actually worked for a few seconds of animation sounded overwhelming to me, and not a great use of time.

The third? Merging consistency with continuity. Even as my technical skills for consistent creation improved, that consistency did not carry across multiple frames or scenes, and was not good enough for animation.

The turning point came when the right combination of tools and workflow finally emerged.

The tools got smarter. I got better at using them. The process is now faster. The results are finally consistent and continuous, and if you know what you’re doing, achievable with minimal resources in less time.

All thanks to some platforms that have really stepped up their game, especially OpenArt AI, which has been my go-to powerhouse for almost two years. I initially turned to it as a travel-friendly alternative to my locally run Automatic1111 workflow.

Back then, OpenArt already had everything I needed to keep rolling with my AI creations: Stable Diffusion, LoRA training, inpainting, face editing, and most importantly, they always stayed up to date with the latest generation models. That included the Flux suite, especially the latest Flux Kontext, which, without my knowing it, was going to be the crucifix I needed to put an end to the monster of scene continuity in AI animation.

If you’ve been around long enough, you know the curse of consistent character design in AI generation: you get one great image, try to recreate it from a different angle, and suddenly you’re dealing with a shapeshifting mess. Armor morphs. Faces change. Proportions betray you.

Flux Kontext: Design That Holds Up Across Angles

But with Flux Kontext, that nightmare finally ended.

This model isn’t just about style, it’s about structural memory. It holds on to geometry, lighting, texture, and silhouette in a way I hadn’t seen before. I could take my main character, a Viking Berserker in this case, turn him 45°, then 90°, then 180°, and the model understood it was the same person, same horns, same armor plates, same axe, just from another angle.

That alone saved me dozens of hours and spared me from having to retouch or regenerate endlessly.

The Chat Feature: Art Direction With a Dialogue Box

Then there’s the Chat feature, which I didn’t expect to love as much as I do.

Instead of engineering 20 prompts for slight variations, I used Chat like an art director. I’d upload an image and say:

“Give me a close-up view of the Viking.”

“Change the axe to a sword.”

“Change the axe to a gun.” (Not sure that one belongs in an RPG, but you never know, if one day I choose to add elements of time travel to the story.)

The Chat feature delivered quickly and with high fidelity. It felt like working with a focused assistant who understood context, visual logic, and my style preferences.

A Sword That Cuts Through Art & Scene Design

For a long time I was a hardcore Stable Diffusion user, on a quest for consistency and control, until Black Forest Labs released their Flux Pro model and OpenArt integrated it into their platform. I started using Flux Pro a few months ago to illustrate a series of dark fables I’m writing. I ended up landing on a particular art style that immediately felt like my kind of world. It became a bit of a signature style, and since then I have used it across multiple projects, including a few of my AI music albums produced with Suno.

One of those albums is Skaalven Dovhkaar, a fantasy soundtrack inspired by my love for Skyrim and the genre as a whole.

This soundtrack, and the story behind it, set the tone for this animated clip.

Using Flux Pro, I created snowy village and forest scenes in that same visual style. Then, using Flux Kontext, I composited the Viking into those scenes, making sure lighting, shadows, and environment matched.

This separation of steps, first designing the character in a controlled space, then placing him into the environment, was the missing piece to build AI animations that felt more intentional and directed, and less like a random disjointed slideshow.

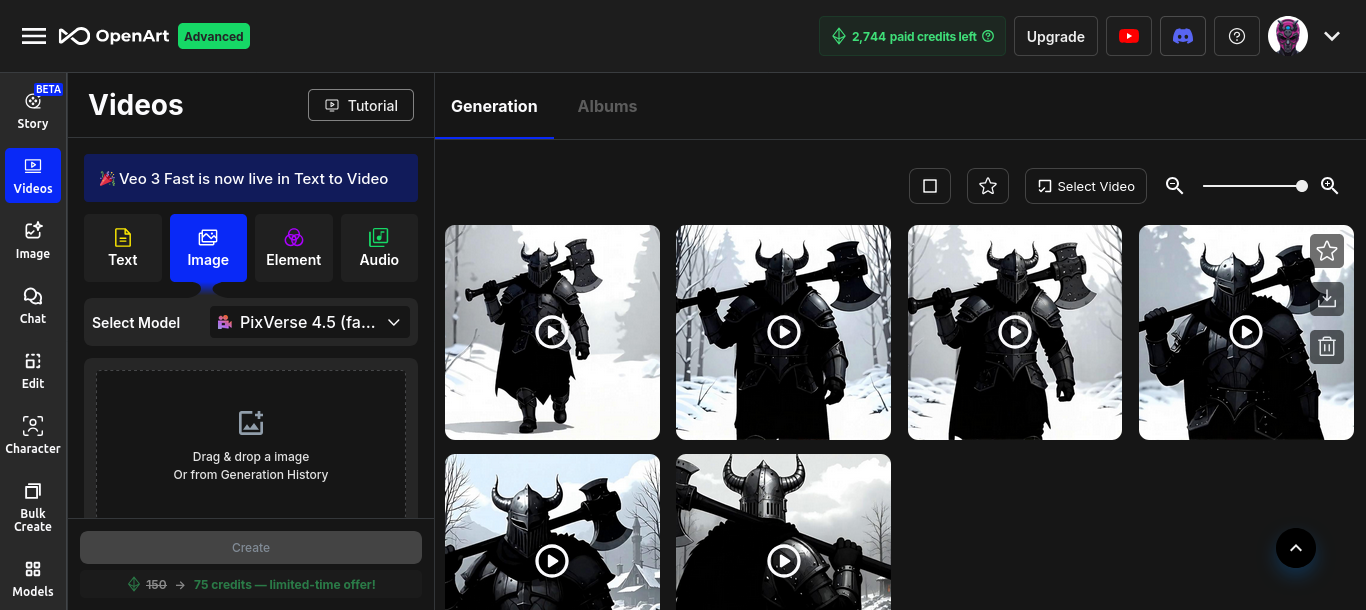

This is where things get kinetic, where still frames begin to breathe using the video generation feature inside OpenArt itself, a full-fledged and loaded arsenal for the AI video creator.

We’re talking Kling AI, Google’s Veo (Veo 2 & 3), my now personal go-to PixVerse 4.5, and other popular models like MiniMax Hailuo 02 and the latest Seedance 1.0.

Kling AI: The Forgotten King

When I first jumped into AI video, Kling AI was my primary weapon of choice. It was new, exciting, and reasonably good at preserving character structure. For a while, it was also the cheapest option.

It taught me a lot, and helped me refine my process for bringing images to life with AI. And for the most part, it did the job.

Veo3: The Dead King

Then came Veo2. I tried it when it was on a limited-time launch offer on OpenArt. Great value, but still not ideal for my stylized work. Then Veo3 arrived at 5 times the cost of Veo2, carried by Google, influencers, trailers, and headlines.

The reality? For me, it was hype over substance.

As someone with a background and deep expertise in SEO, I know Google’s ecosystem all too well. I’ve seen the same pattern there: break rankings with algorithm “updates,” then sell visibility through ads. Veo3 felt like the same marketing playbook: expensive, overhyped, and irrelevant to my use case.

When limited on resources, use your brain. That’s how I became a proficient Black Hat SEO, and also how I intend to approach my AI creative journey.

PixVerse: The New King

And then, I stumbled onto PixVerse. And that changed everything.

There’s a primary reason I gravitated toward PixVerse. It had fidelity. Not just visual fidelity, but style fidelity to my art style. When I gave it a frame of my Viking walking through snow-covered forests in my art style, it didn’t “fix” my style into realism or generic outputs.

To me it felt like PixVerse looked at my Viking, walking through a snow-covered forest, and said:

“Yeah, I get what you’re going for.”

And it respected it. That alone should be enough, but here’s the kicker: it’s fast and way cheaper than Veo 3. Fast and cheap, two words every animator (AI or traditional) wants to hear.

Time is the invisible budget. The one cost no one accounts for until it’s bleeding them dry. If you’ve ever spent hours on a render just to realize a small detail on the shoulder armor plate is not symmetrical, is in the wrong place, or the background melted into fog, you know what I’m talking about.

Now scale that up to AI animation. Each clip, 3 to 5 seconds. Dozens of variations. Fixes. Reruns. If your model takes 5 minutes per generation, you’ll burn your creative energy (and credits) before you even hit the export button.

PixVerse is quick. Consistently under one minute for my style-heavy clips. That meant I could stay in flow. Generate, preview, regenerate if needed, without burning out or breaking my rhythm.

And let’s not underestimate price. If you’re running on a budget, and let’s be honest, even pros are budgeting AI credits these days, PixVerse gives you the most quality per credit spent. Especially when you’re pushing for scene continuity across multiple frames and shots, not just flashy random single moments.

At the time of writing, around $30/month on OpenArt (annual plan) gets you 24,000 credits, which works out to:

9 Veo3 clips at 2400 credits per clip

120 Kling AI 10-second clips at 200 credits per clip

160 PixVerse 8-second clips, currently on a time-limited offer of 150 credits per clip. 80 clips at 300 credits when the offer is over.
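The comparison above is simple integer division of the monthly credits by the per-clip price. A minimal sketch, using only the figures quoted in this post (the names are illustrative and not part of any OpenArt API):

```python
# Figures quoted in this post at the time of writing; they will change.
MONTHLY_CREDITS = 24_000

# Credits per clip as quoted above (labels are illustrative).
CREDITS_PER_CLIP = {
    "Veo3": 2_400,
    "Kling AI (10 s)": 200,
    "PixVerse (8 s, offer)": 150,
    "PixVerse (8 s, regular)": 300,
}


def clips_per_month(budget, cost_per_clip):
    """Whole clips that fit in the budget (integer division)."""
    return budget // cost_per_clip


def clips_table(budget=MONTHLY_CREDITS, prices=CREDITS_PER_CLIP):
    """Map each model label to the number of clips the budget covers."""
    return {model: clips_per_month(budget, cost) for model, cost in prices.items()}


if __name__ == "__main__":
    for model, clips in clips_table().items():
        print(f"{model}: {clips} clips")
```

Plain division reproduces the Kling AI and PixVerse counts exactly. For Veo3 it gives 10 rather than 9, so one of the two quoted Veo3 figures is probably rounded. Either way, the gap between models is what matters.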

The choice for now was obvious.

And now… came the question that I wanted to find an answer to.

I had the tools, the technical skills, the art style, the story, the music and the frames ready.

The one question that had been bothering me for a while remained. Can AI hold a scene together for longer than a few seconds without breaking continuity or coherence?

Historically, the answer has been no.

For most animators working with AI, that’s still the elephant in the room. Until now, the standard approach in animation (both traditional and AI) has been to use beautiful stills, moving from one to the next within just a few seconds, usually no longer than 10. That’s fine, and often this approach is necessary to give the viewer multiple perspectives on what is happening in the scene at that moment.

It gets the job done and it makes money, loads of it, often more than live-action movies do. The top 10 highest-grossing Japanese movies of all time are all animation made with a similar approach, with Demon Slayer: Mugen Train sitting at number one with box office revenue of around 500 million USD on a budget of around 15 million.

But it’s not groundbreaking, or a game-changer as influencers like to call it, and it’s far from what AI is supposedly set to accomplish. It’s not continuity, and for what I had in mind, it would be like trying to walk through Skyrim but only being shown postcards.

Then, OpenArt invited me to beta test something called Story.

One Builder To Rule Them All

Imagine this as an animator:

You upload a sequence of image frames or video clips, generated in OpenArt or elsewhere.

You arrange them like a storyboard, and drop them into a timeline.

You upload and sync with your own music.

You preview the cuts, adjust pacing, and render it all into a cohesive video that you can export or share with a link.

All in one place. No expensive third-party glue required.

The Story feature is a strategic response to a core AI art problem: fragmented workflows. Until now, making a short film with AI meant juggling multiple tools, with image generation in one, video generation in another, editing in yet another, and then praying it all stitched together cleanly.

Now, OpenArt is taking that burden away from me, as they have always done in the past, like a companion character in an RPG helping me, the main character, complete an important quest. In my case, to become one of the most versatile and consistent AI creators on the planet.

The Main Quest

With the tech stack in place, Flux Pro for art style, Flux Kontext + Chat for character design, PixVerse for animation, and Story for the assembly, I was ready to create something that years ago felt very much out of reach.

A 1-minute-long, continuous AI-generated scene that didn’t fall apart stylistically or structurally. A sequence that could actually hold direction, coherence, and continuity.

I chose to create a music video around one of my compositions from Skaalven Dovhkaar, a dark, ambient composition rooted in the same mythology that inspired the character himself.

The scene is simple, deliberately so:

A lone Viking walks away from his village.

Snow falls. The wind howls through crooked pine trees.

Some fog prevails.

The fires of the settlement flicker behind him as he descends into the unknown.

There are no battles. No dragons. At least not yet.

Just movement. Space. Resolve.

The visual pacing had to match the rhythm of the track.

The goal was not to overwhelm the viewer with cuts or effects, but to create the weight of a departure. A journey. A shift from one phase to another, much like the journey many AI artists are experiencing now as we transition from single-frame curiosities to actual storytelling.

Each sequence had to match not only the art style, but also maintain character fidelity, camera logic, atmospheric continuity, and environmental changes.

The Side Quest

I went into this project with two very clear and realistic objectives:

Achieve a visually acceptable level of scene and character continuity across more than one minute of animation, using nothing but AI tools currently available to the public.

Do it with the absolute minimum budget possible, translating to the lowest credit cost and time investment without compromising too much on visual fidelity.

That was it. No convoluted pipelines or expensive software licenses. Just my skills, the tools, and the track.

While OpenArt supported me with credits during the Story beta, the full process, generation to animation to final render, used fewer than 50,000 credits.

That’s about $60 USD on an annual OpenArt plan.
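As a rough check on that figure, here is a minimal sketch that converts credits to dollars, assuming the plan quoted earlier (about $30 for 24,000 credits). The function name is illustrative and the rate is only valid at the time of writing:

```python
# Plan figures quoted earlier in this post; treat them as assumptions.
PLAN_PRICE_USD = 30.0
PLAN_CREDITS = 24_000


def credits_to_usd(credits, plan_price=PLAN_PRICE_USD, plan_credits=PLAN_CREDITS):
    """Approximate dollar cost of a number of credits at the plan's rate."""
    return credits * plan_price / plan_credits


if __name__ == "__main__":
    # Upper bound for this project: fewer than 50,000 credits were used.
    print(f"${credits_to_usd(50_000):.2f}")
```

At that rate 50,000 credits would cost $62.50. The project used fewer credits than that, which is where the figure of about $60 comes from.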

But let me be very clear, this isn’t one of those “I did for 60 bucks what studios do for $60,000” posts.

That’s not how production is measured.

That $60 reflects the tool cost only.

It doesn’t account for:

Dozens of hours producing the video.

Hundreds of hours mastering the tools and refining my workflow.

Thousands of hours immersed in animation, storytelling, world-building, and games, cultivating the mental library that makes these visuals possible.

So are the results perfect? Absolutely not.

Some transitions between frames need more adjustments. The lighting didn’t always stay uniform between shots. The armor and scene warped a few times. The walking motion still had that AI-generated stiffness we’ve all come to recognize.

But for only $60 spent on AI tools for such a render, I would call it a huge leap forward. Especially in a space where the running idea is that such results are achievable only with more expensive models like Veo 3.

The real cost, though, was time, mastery, curiosity, and creative mileage. And that is something you can’t buy with a subscription. The kind of cost that’s invisible in the export window, but impossible to fake.

One of the most important lessons I’ve learnt in 3 years of working with generative AI is to:

“Let go of the expectation of perfection, focus instead on creating a prototype for the future.”

It’s a philosophy I picked up from Hideo Kojima, creator of the video game series Metal Gear Solid and also my favorite game director, who once said:

“Game industry’s always been depended on the technology. Technology evolves on daily so as hardware & software. That’s why you need to keep running. The one who runs at his/her best, the one who runs strategically, the one who runs on his/her pace, the one who seeks opportunity while running in the 2nd pack. You don’t need to be in the 1st pack all the time.”

Whether it’s gaming or AI, when you work with constantly evolving technology, you’re not just building things, you’re co-evolving with the tools. And that means friction. Broken outputs. Dead ends. Creative sparks that vanish in a puff of RAM.

Now, when something doesn’t work, I archive it. I throw it into my “creative dumpster”, not as trash, but as compost for future growth. A seed bank. A waiting room for ideas, and I have loads of them, ahead of their time, waiting patiently to be brought to life.

Because I know, either the tools will catch up, or my skills will. Today was one of those moments.

I took an idea off the shelf, and it came to life. That’s not just a personal win. That’s proof of evolution, for both the technology and the human behind it.